Parameter files are usually read in JAMS with the StandardEntityReader component, which is part of the J2000 repository. This component interprets all data as Double or String values; it was previously not possible to read other types of data with it. Since parameter files consist almost entirely of numeric data, this is usually not a problem. A problem arises when the data should be passed to a component that expects a different data type, such as an integer.

This happens, for instance, when sub-catchments should be linked to specific runoff measurements. Assume the following parameter file of sub-catchments:

    #gauged subcatchments.par
    ID  gauging station     subBasinIDs              obsQcolumn
    ...
    0   Arnstadt            [91,92,93,94,98,99,100]  1
    1   Gehlberg            [98,99,100]              2
    2   Graefinau Angstedt  [64,65,66]               3
    ...

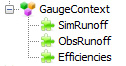

Each sub-catchment is characterized by an ID, the name of its gauging station, a list of sub-sub-catchments and a column id. The column id links the sub-catchment to an observed runoff time series in an additional file. This parameter file can be read with a StandardEntityReader into an EntityCollection, which we will name “gauges”. A simple SpatialContext can be used to iterate over all gauges and to calculate, for each gauging station, the simulated stream flow and the corresponding efficiency measures. This can be done with the following sub-model:

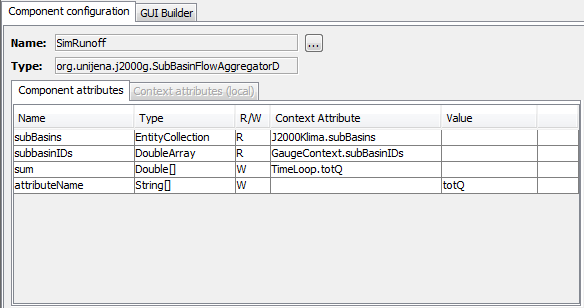

The GaugeContext iterates over all sub-catchments. For each sub-catchment the simulated runoff is computed with a SubBasinFlowAggregator. This component sums up the runoff from several sub-sub-catchments into a total runoff volume which is stored in the attribute totQ.

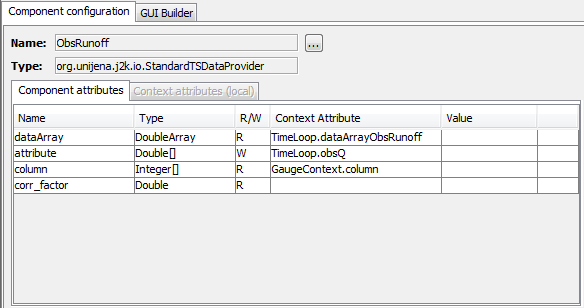
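The aggregation step can be pictured with a minimal plain-Java sketch. It is not the SubBasinFlowAggregator implementation: the class name, the method name, the `int[]` ID list and the map of simulated runoff values are all assumptions made for illustration.

```java
import java.util.Map;

// Hypothetical stand-in for the aggregation step; this is NOT the JAMS SubBasinFlowAggregator API.
final class FlowAggregationSketch {

    /** Sums the simulated runoff of the listed sub-basins; unknown IDs are rejected. */
    static double totalRunoff(int[] subBasinIds, Map<Integer, Double> simRunoffById) {
        double totQ = 0.0;
        for (int id : subBasinIds) {
            Double q = simRunoffById.get(id);
            if (q == null) {
                throw new IllegalArgumentException("No simulated runoff for sub-basin " + id);
            }
            totQ += q;
        }
        return totQ;
    }

    public static void main(String[] args) {
        // Illustrative runoff values only; they are not taken from any model run.
        Map<Integer, Double> sim = Map.of(98, 1.5, 99, 2.0, 100, 0.5);
        System.out.println(totalRunoff(new int[] {98, 99, 100}, sim));
    }
}
```

Rejecting unknown IDs, rather than silently skipping them, is a design choice of this sketch; it makes a typo in the subBasinIDs column visible early.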

The obsRunoff component is an instance of StandardTSDataProvider. This component selects a specific index within a DoubleArray. We pass the column id from the parameter file to get the desired runoff data for the specific sub-catchment and store it in an obsQ attribute.
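The column selection can be sketched in plain Java as follows. This does not reproduce StandardTSDataProvider; the class and method names are hypothetical, and the 0-based column index is an assumption. Note that a Java array can only be indexed with an `int`, which is why the type of the column id matters.

```java
// Hypothetical sketch of picking one observed-runoff column per time step;
// it does not reproduce the StandardTSDataProvider implementation.
final class ColumnSelectSketch {

    /** Returns the value at the given column (0-based is assumed here), with a bounds check. */
    static double selectColumn(double[] observedRow, int column) {
        if (column < 0 || column >= observedRow.length) {
            throw new IndexOutOfBoundsException(
                    "Column " + column + " is outside a row of length " + observedRow.length);
        }
        return observedRow[column];
    }
}
```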

With totQ and obsQ, the efficiency of the model can then be calculated for each sub-catchment. This approach works fine except for one thing: StandardTSDataProvider expects an integer, while the parameter file provides a double value. There are several possible solutions to this problem:

- The column id can be converted from double to integer. This requires a new component, which does not exist yet.

  pro: This component may be useful for several other issues.

  con: The model will be enlarged by components converting attributes from one type to another.

- Writing an additional StandardTSDataProviderDoubleVersion component, which accepts double values for the column index.

  pro: It works.

  con: This creates a new component that differs only slightly from the existing one. This will bloat the component repository and make things difficult to maintain.
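To make the first option concrete, the core of such a conversion could look like the sketch below. It is plain Java, not a JAMS component: the class and method names are hypothetical, and rejecting non-integral values instead of rounding them is an assumption of this sketch.

```java
// Hypothetical conversion helper; a real JAMS component would wrap similar logic
// around its input and output attributes.
final class DoubleToIntSketch {

    /** Converts a double to an int, refusing values that are not whole numbers or out of range. */
    static int toInt(double value) {
        if (Double.isNaN(value) || Double.isInfinite(value)) {
            throw new IllegalArgumentException("Not a finite number: " + value);
        }
        if (value != Math.rint(value)) {
            throw new IllegalArgumentException("Not a whole number: " + value);
        }
        if (value < Integer.MIN_VALUE || value > Integer.MAX_VALUE) {
            throw new IllegalArgumentException("Outside the int range: " + value);
        }
        return (int) value;
    }
}
```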

The real problem is the EntityReader, which cannot read types other than doubles. For this reason I added type support to the EntityReader. Since version 3.0_b12 you can add a

#TYPED

comment to your parameter file. This will tell the EntityReader to expect an additional line in the header of the parameter file, which defines the type of the data. Allowed types are

Double, DoubleArray, Float, FloatArray, Integer, IntegerArray, Long, LongArray, String, Calendar, TimeInterval, Boolean and BooleanArray.

The new parameter file is shown below. With it, you can build up the context as shown in the first figure without any problems.

    #gauged subcatchments.par
    #TYPED
    ID      gauging station     subBasinIDs              obsQcolumn
    Double  String              DoubleArray              Integer
    ...
    0       Arnstadt            [91,92,93,94,98,99,100]  1
    1       Gehlberg            [98,99,100]              2
    2       Graefinau Angstedt  [64,65,66]               3
    ...
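How a type line can drive the parsing of each data row is sketched below in plain Java. This is not the EntityReader code: the class and method names are hypothetical, only a subset of the allowed types is covered, and tab-separated columns are an assumption (it lets values such as "Graefinau Angstedt" contain a space).

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch; names are illustrative and do not mirror the JAMS EntityReader API.
final class TypedRowSketch {

    /** Converts one token according to its declared type (subset of the allowed types). */
    static Object parseValue(String type, String token) {
        switch (type) {
            case "Double":      return Double.valueOf(token);
            case "Integer":     return Integer.valueOf(token);
            case "Long":        return Long.valueOf(token);
            case "Boolean":     return Boolean.valueOf(token);
            case "String":      return token;
            case "DoubleArray": return parseDoubleArray(token);
            default:
                throw new IllegalArgumentException("Type not covered by this sketch: " + type);
        }
    }

    /** Parses a bracketed, comma-separated list such as [98,99,100]. */
    static double[] parseDoubleArray(String token) {
        String t = token.trim();
        if (t.length() < 2 || !t.startsWith("[") || !t.endsWith("]")) {
            throw new IllegalArgumentException("Expected [a,b,...] but got: " + token);
        }
        String inner = t.substring(1, t.length() - 1).trim();
        if (inner.isEmpty()) {
            return new double[0];
        }
        String[] parts = inner.split(",");
        double[] values = new double[parts.length];
        for (int i = 0; i < parts.length; i++) {
            values[i] = Double.parseDouble(parts[i].trim());
        }
        return values;
    }

    /** Maps column names to typed values; columns are assumed to be tab-separated. */
    static Map<String, Object> parseRow(String namesLine, String typesLine, String dataLine) {
        String[] names = namesLine.split("\t");
        String[] types = typesLine.split("\t");
        String[] tokens = dataLine.split("\t");
        if (names.length != types.length || names.length != tokens.length) {
            throw new IllegalArgumentException("Header, type and data lines differ in column count");
        }
        Map<String, Object> row = new LinkedHashMap<>();
        for (int i = 0; i < names.length; i++) {
            row.put(names[i], parseValue(types[i].trim(), tokens[i].trim()));
        }
        return row;
    }
}
```

With the type line from the file above, the obsQcolumn value of a row comes back as an `Integer` rather than a `Double`, so it can be handed to a component that expects an integer without a separate conversion step.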